Recently I published a web app named Face Ventura Pet Detector that uses facial recognition to find pets that look like a user’s uploaded photos. This post describes how it all works.

What is the facial recognition algorithm it uses?

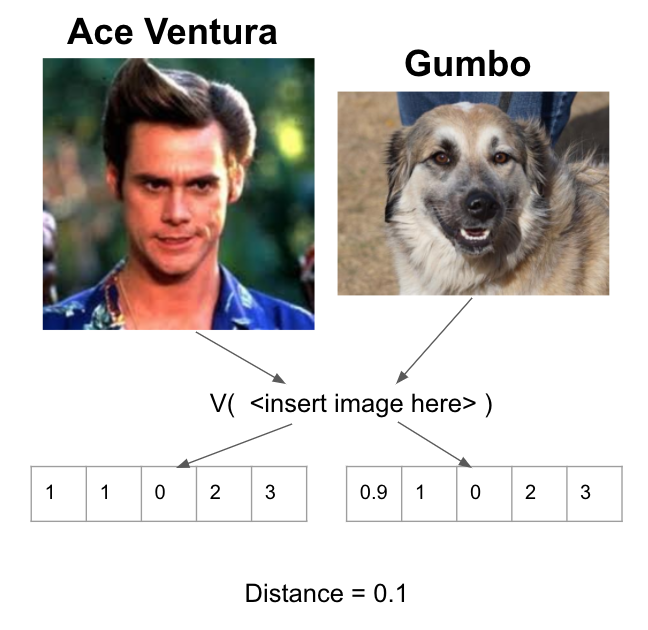

At its simplest, the algorithm takes an image, $I$, and produces a vector, $V(I)$. If we do this to two images, one from a user, $I_{\mbox{user}}$, and one from a pet, $I_{\mbox{pet}}$, we can compare the images in terms of their vectors:

$$ \mbox{Distance}(I_{\mbox{user}},I_{\mbox{pet}}) = \| V(I_{\mbox{user}} ) - V(I_{\mbox{pet}}) \| $$

Once the distance between a user and a set of pets has been calculated, the distances can be sorted to find the closest pet to the user.

Visually, this process looks like this:

Why is this a natural way to think about facial recognition?

The key pieces of this algorithm are: (1) we’re using the same function $V$ to produce vectors from images, (2) we are most interested in the comparison between vectors, and (3) we can train $V$ to focus on two images at a time. This allows the network to focus on comparing two images, hopefully moving two images of the same face close together and two images from different faces further apart in the resulting vector space. This methodology has a couple properties that make it potentially suitable for facial recognition.

- If

$V$is a continuous function, then the same or very similar images will naturally be close together. If, for example,$I_{\mbox{user}} \approx I_{\mbox{pet}}$then$V(I_{\mbox{user}}) \approx V(I_{\mbox{pet}})$. Two different images of the same face therefore may have close vectors even without any training of$V$. - Because

$V$is only trained to differentiate between two different faces–rather than classify a face as a particular person–we don’t need to retrain$V$every time we encounter a new face. This method can therefore usefully find similarity in images/faces/pets that the network has never encountered previously.

Since the vector comparison is a key part of creating $V$, I have called this a comparison network. Other authors have called networks like this siamese networks.

The academic paper first showing this structure for facial recognition is Chopra, Hadsell and LeCun (2005). It discusses properties of this methodology in much greater detail.

How to train $V(\cdot)$

The function $V$ itself can be constructed like any neural network. Since our inputs are images, it is natural to use convolutional layers leading into dense layers to produce the final vector. The loss function is what is special for this application. It enables the network to focus on vector comparisons instead of classification (a more standard task). This loss is discussed in detail in Hadsell, Chopra and LeCun (2006). We re-write it here for clarity.

For two input images, $I_a$ and $I_b$, we define $D(I_a,I_b) = \| V(I_a) - V(I_b) \|$. If the images are representations of the same person, then

$$ L(I_a,I_b) = \frac{1}{2} D(I_a,I_b)^2 $$

If the two input images are not of the same person, then

$$ L(I_a,I_b) = \frac{1}{2} \max(0, m - D(I_a,I_b))^2 $$

where $m$ is a margin variable determining how far away the two vectors can be before they are sufficiently distant to not incur any loss.

Mathematically, there isn’t anything hugely special about this loss function. It simply encourages vectors from the same faces to be close and vectors from different faces to be further away.

Comparison Networks for other things

The structure of this network supports a fast and easy way to determine whether two photos have similar features. BUT, there’s no reason why this method is only applicable to faces (or pets for that matter). For example, the first appearance of this comparison structure is from Bromley, Guyon, LeCun, Säckinger, Shah (1994) where written signatures are analyzed. In addition, the Keras software package’s website provides an MNIST example. Since general comparison is an important method to analyze almost any data, I would expect this concept to provide further novel ways to approach problems in Machine Learning and AI.